Artificial intelligence continues to inch closer to human-level versatility, and OpenAI is making bold claims about its latest model, GPT-5. According to the company, GPT-5 now performs on par with humans across a variety of professional tasks spanning multiple industries and job types. This marks a significant moment in the evolution of AI models: the claim is no longer just about improving benchmark scores but about capability at real work.

In this article, we break down what those claims mean, examine the evidence, discuss the potential impact on labor markets, consider the limitations, and explore how industries might adapt to this new reality.

What OpenAI Is Claiming

OpenAI’s public statements suggest that GPT-5 performs at a human level across a broad range of job functions. These include analytical and technical work such as drafting legal documents, writing code, creating design plans, and writing technical content, as well as some creative and advisory tasks. The company frames this not as a replacement for human professionals but as a shift in how value is produced and shared.

OpenAI emphasizes that GPT-5’s performance is not just measured in narrow benchmarks, but in “authentic work deliverables”—like drafting proposals, engineering schematics, or professional memos. The implication: GPT-5 is capable of generating outputs that would be considered acceptable, or even competitive, in many job settings.

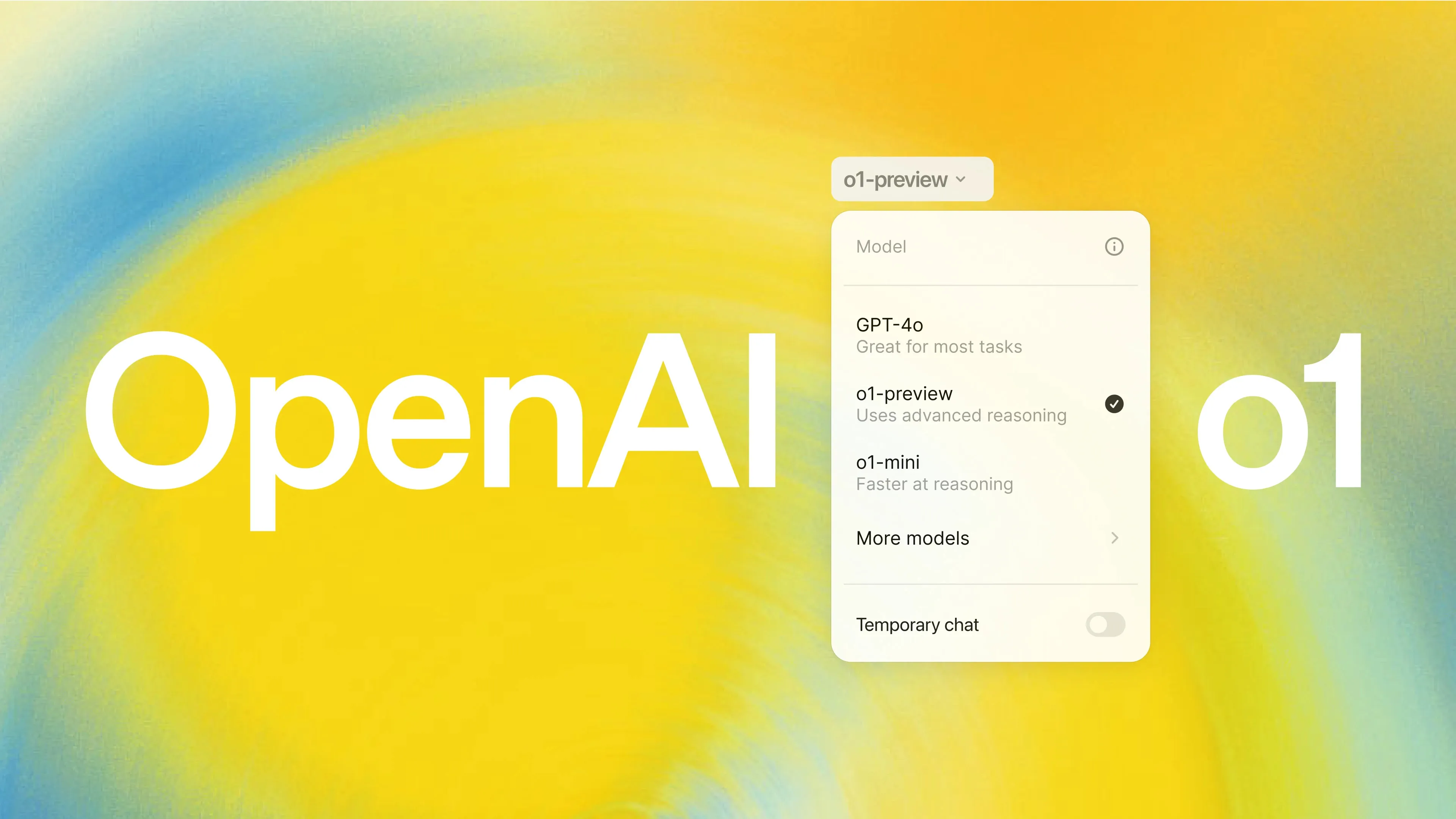

Furthermore, OpenAI highlights that the leap from prior models—especially from GPT-4 or hybrid reasoning models—to GPT-5 is substantial. The company suggests GPT-5 is more consistent, less prone to hallucinations (fabricated facts), and more adaptable across domains.

Evidence & Evaluation: How Realistic Are the Claims?

Benchmark vs Real Work

One challenge in assessing these claims is distinguishing performance on benchmarks from performance in real operational settings. Benchmarks simulate tasks under controlled conditions, but real jobs involve unpredictable context, domain knowledge, interpersonal nuance, and accountability.

OpenAI counters this by emphasizing its use of “authentic deliverables”—tasks that mimic real professional work. Still, independent verification and domain-specific audits will be required to validate performance claims across industries.
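To make "independent verification" more concrete, here is a minimal sketch of how an auditor might summarize blind, pairwise comparisons. In each comparison, domain experts grade a model deliverable against a human expert’s deliverable for the same task. The labels `"win"`, `"tie"` and `"loss"`, the function name `win_or_tie_rate`, and the sample data are hypothetical. This is not a description of OpenAI’s own evaluation method. A Wilson score interval is used so that a small audit reports its uncertainty instead of a single headline number.

```python
import math
from collections import Counter

# Hypothetical grading labels, recorded from the model's side of each comparison.
VALID_LABELS = {"win", "tie", "loss"}


def win_or_tie_rate(judgments, z=1.96):
    """Share of blind comparisons where graders rated the model's deliverable
    as good as or better than the human expert's, plus a Wilson score
    interval (z=1.96 gives roughly 95% coverage)."""
    counts = Counter(judgments)
    unknown = set(counts) - VALID_LABELS
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    n = sum(counts.values())
    if n == 0:
        raise ValueError("no judgments to summarize")

    p = (counts["win"] + counts["tie"]) / n

    # Wilson score interval for a binomial proportion.
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return p, (centre - half_width, centre + half_width)


# Illustrative data only: 100 hypothetical graded comparisons.
rate, (low, high) = win_or_tie_rate(["win"] * 40 + ["tie"] * 10 + ["loss"] * 50)
print(f"win-or-tie rate {rate:.2f}, 95% CI [{low:.2f}, {high:.2f}]")
```

Even at a 50% rate, 100 comparisons leave an interval roughly ten percentage points wide on each side. This is one reason domain-specific audits need enough graded tasks per field before drawing conclusions.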

Comparing GPT-5 to Previous Models

Early reports and public demos suggest GPT-5 outperforms GPT-4o and other recent models in speed, consistency, and instruction adherence. However, some users and critics in online communities note that on edge cases or highly specialized tasks, GPT-5 may still fall short of expert human output.

OpenAI acknowledges the model is not perfect—for example, it still can make factual errors, especially in poorly documented domains—but the frequency and severity of these issues are claimed to be lower than before.

Performance in Complex, High-Stakes Domains

Where the claims become most interesting is in domains like legal, healthcare, engineering, or finance—fields where mistakes carry consequences. If GPT-5 can reliably produce solid first drafts or suggestions in those areas, it would reshape task division in those professions. But full adoption will hinge on trust, auditability, and human oversight.

Implications for Jobs and Labor Markets

Augmentation Over Replacement

One likely scenario is augmentation rather than outright replacement. GPT-5 may serve as a powerful tool to assist professionals—drafting, researching, suggesting, or automating routine tasks—while humans retain final judgment, domain expertise, and accountability.

For example: a lawyer may use GPT-5 to compose a detailed brief, then edit, refine, and ensure legal compliance. A software engineer might have GPT-5 generate boilerplate code or prototypes, then fine-tune architecture and robustness.

Task-Level Disruption

OpenAI’s claims don’t necessarily imply whole jobs vanish. More likely, specific tasks within roles may shift heavily toward automation. Workers will need to adapt—focusing on oversight, critical thinking, domain depth, managing edge cases, and skills the AI can’t reliably handle.

Productivity & Cost Pressure

If GPT-5 truly delivers human-competitive outputs at lower cost (e.g. via API usage), it places significant pressure on organizations to adopt it. Firms may see gains in productivity, lower labor costs for certain tasks, and faster turnaround. This may accelerate adoption in sectors with tight margins or scaling pressure.
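The cost argument depends on more than the API price. The sketch below estimates the expected cost per accepted deliverable under two assumptions. First, every draft still gets a human review. Second, rejected drafts are simply regenerated until one is accepted. All function names and numbers are hypothetical placeholders, not published prices or measured acceptance rates.

```python
def cost_per_accepted_deliverable(generation_cost, review_hours, hourly_rate, acceptance_rate):
    """Expected cost of one accepted AI-drafted deliverable (hypothetical model).

    Each attempt costs the generation fee plus one human review. Attempts are
    assumed independent, so the expected number of attempts until acceptance
    is 1 / acceptance_rate (the mean of a geometric distribution).
    """
    if not 0 < acceptance_rate <= 1:
        raise ValueError("acceptance_rate must be in (0, 1]")
    cost_per_attempt = generation_cost + review_hours * hourly_rate
    return cost_per_attempt / acceptance_rate


def human_only_cost(work_hours, hourly_rate):
    """Cost of a professional producing the same deliverable unaided."""
    return work_hours * hourly_rate


# Hypothetical inputs: $2 of model usage, 30 minutes of review at $80/hour,
# 70% of drafts accepted, versus 3 hours of unaided work at the same rate.
ai = cost_per_accepted_deliverable(2.0, 0.5, 80.0, 0.7)
human = human_only_cost(3.0, 80.0)
print(f"AI-assisted: ${ai:.2f}  human-only: ${human:.2f}")
```

Under these made-up inputs, the AI-assisted path costs about a quarter of the human-only path. The result is sensitive to review time and acceptance rate, however. If review took 2 hours and only 40% of drafts were accepted, the estimate would rise to (2 + 160) / 0.4 = $405, which is above the human-only figure.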

Inequality & Skill Polarization

As with other technologies, benefits may accrue disproportionately to those who can use, manage, or build with AI. Workers whose tasks are easily automated may be most exposed. Those with deep domain knowledge or creative skills, or whose roles center on interpersonal work or high-stakes decisions, may be safer. The gap between “AI-augmented professionals” and everyone else may widen.

Policy & Ethical Considerations

Rapid advances raise policy questions: how to manage job displacement and retraining, how to ensure safety, who bears liability when AI makes errors, and how to ensure fair access to AI capabilities. Professional licensing and regulation may need updating to account for AI-assisted output.

Limitations, Risks & Caution

Hallucinations & Mistakes

No AI is immune to factual errors, especially with specialized or fast-changing knowledge. GPT-5 may present information confidently but inaccurately. In critical domains such as medicine or legal regulation, these risks must be mitigated.

Lack of Continuous Learning

OpenAI has noted that GPT-5 doesn’t continuously learn from deployment data (i.e. it doesn’t self-improve from each instance). That means it may lag behind domain changes unless updated versions are released.

Context, Judgment & Human Nuance

Human professionals add value not just through knowledge but through judgment, ethics, empathy, negotiation, and awareness of context. Many tasks require reading subtle cues, weighing ethical trade-offs, and managing human relationships, which are areas where AI remains weak.

Access, Bias & Concentration

Access to GPT-5’s highest tiers may be limited by cost or API restrictions. If only certain institutions can afford top-level AI, concentration of power could increase. Also, bias or systemic flaws in training data may propagate errors or unfairness.

Overhype & Expectation Management

Technology is often marketed with bold claims before full maturity. Some earlier announcements about AI “replacing” jobs proved overblown in the short term. Users and organizations should temper expectations and pilot carefully.

What Industries Are Most Affected

- Legal & Paralegal Work: Drafting documents, contracts, summaries, legal research
- Software & Engineering: Boilerplate code, prototyping, unit tests, spec generation
- Content & Media: Copywriting, summarization, initial drafts, localization
- Financial Services: Report generation, modeling, compliance checks
- Consulting & Strategy: Data analysis, trend summarization, scenario planning (although human judgment remains crucial)

Jobs that rely heavily on routine text, structure, or patterns may see the deepest changes. Roles requiring deep domain expertise, human trust, or strategic oversight are more likely to resist full automation.

What Organizations Should Do Now

- Pilot & experiment: Organizations should build safe pilot programs that integrate GPT-5 into workflows under human supervision, to assess benefits, pitfalls, and costs.
- Focus on re-skilling & hybrid roles: Train professionals to work with AI, building skills such as prompt engineering, oversight, auditing, and domain specialization.
- Implement audit & safety pipelines: Ensure that AI-generated outputs are verified, logged, and corrected. Systems should include human validation and correction loops.
- Revise job descriptions & restructure tasks: As some tasks shift to AI, roles may evolve toward supervision, exception handling, domain excellence, or augmentation.
- Monitor ethical, legal, and compliance readiness: Especially in regulated fields, organizations must ensure that AI usage complies with regulations, liability frameworks, and professional standards.
- Prioritize equitable access: Avoid concentrating AI capability in a few hands. Smaller teams and more diverse users should have pathways to benefit, not just well-funded firms.
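The audit & safety pipelines recommendation above can be sketched as a small review gate. Every AI-generated draft starts as pending. It can only be released after a named human approves or corrects it, and every decision is appended to an audit log. This is a minimal, hypothetical sketch: the `Draft` class, the status names, and the log fields are illustrative assumptions, not any product’s API. A real deployment would persist the log and integrate with existing document and access-control systems.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    CORRECTED = "corrected"
    REJECTED = "rejected"


@dataclass
class Draft:
    """An AI-generated deliverable awaiting human validation (hypothetical model)."""
    draft_id: str
    content: str
    status: Status = Status.PENDING
    audit_log: list = field(default_factory=list)

    def _decide(self, reviewer, new_status, note=""):
        # Only pending drafts can be decided; a decision is final in this sketch.
        if self.status is not Status.PENDING:
            raise ValueError(f"{self.draft_id} already {self.status.value}")
        self.status = new_status
        self.audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "reviewer": reviewer,
            "action": new_status.value,
            "note": note,
        })

    def approve(self, reviewer):
        self._decide(reviewer, Status.APPROVED)

    def correct(self, reviewer, corrected_content, note):
        # The reviewer's note is logged; the corrected text replaces the draft.
        self._decide(reviewer, Status.CORRECTED, note)
        self.content = corrected_content

    def reject(self, reviewer, note):
        self._decide(reviewer, Status.REJECTED, note)


def releasable(draft):
    """A draft may leave the pipeline only after a human approved or corrected it."""
    return draft.status in (Status.APPROVED, Status.CORRECTED)


# Usage: a reviewer fixes a factual error before the memo is released.
memo = Draft("memo-001", "Model-drafted memo text")
print(releasable(memo))
memo.correct("j.doe", "Corrected memo text", "Fixed a misquoted regulation")
print(releasable(memo), memo.audit_log[0]["action"])
```

Making each decision final keeps the log simple to audit. A team that needs multi-stage review could instead allow further transitions, as long as each one is logged.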

What to Watch Going Forward

- Independent audits and third-party benchmarks that validate or contest OpenAI’s performance claims
- Domain-specific performance: whether GPT-5 handles medicine, law, science, and engineering at expert level
- Adoption curves: which industries move faster, and which pilots fail or succeed
- Pricing and access models: whether GPT-5 will be broadly affordable or gated
- Emergence of competitive models from other labs (such as Anthropic and Google DeepMind) that match or surpass GPT-5

OpenAI’s claim that GPT-5 can approach or match human performance across a broad range of job tasks sits at a critical inflection point for AI and work. If true, it could redefine how tasks are distributed in workplaces, accentuate hybrid human-AI roles, and push rapid rethinking of job structures.

However, such claims must be tested in real-world settings, not only in benchmarks. GPT-5 offers powerful tools, not guaranteed replacement. The future likely lies in augmentation: letting humans focus on judgment, ethics, nuance, and creativity, while AI manages structure, drafting, and scale.

For professionals, institutions, and policymakers, the time to prepare is now. Skills, oversight, reskilling, ethical frameworks, and cautious deployment will determine whether GPT-5’s capabilities empower humanity—or upend large parts of work without humanity in the loop.
